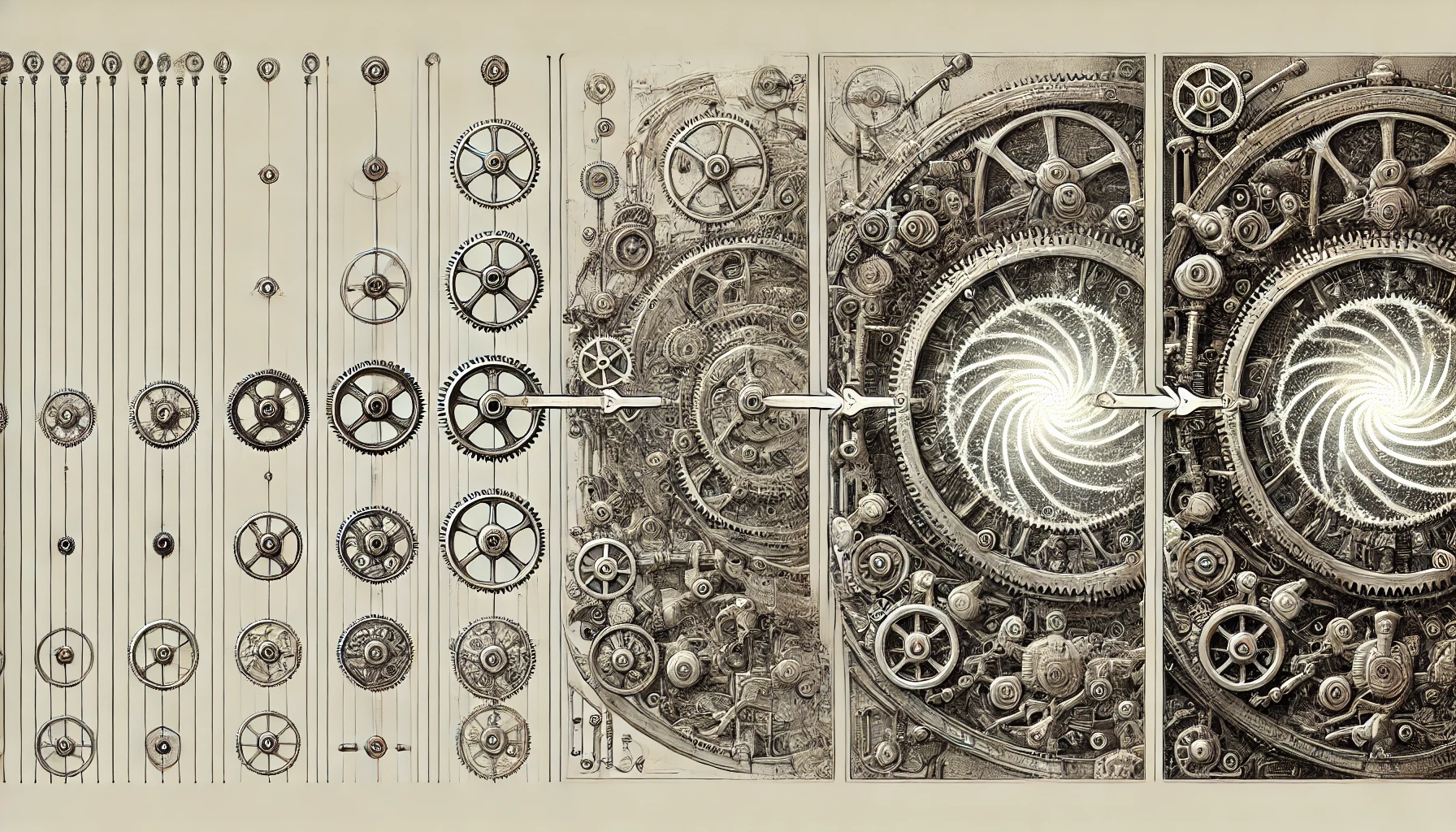

Illustration by DALL·E

Scaling up

A second source of our unpreparedness for an artificial intelligence superior to our own is that the very way in which the overtaking occurred was unexpected: it was due almost entirely to a process of growth in size, the one additional element being the ‘transformer’.

The route to synthesising human intelligence that turned out to be the right one was counter-intuitive: the early prototype of an artificial neuronal network, the perceptron, was still only a moderately promising device. This elementary type of connectionist model was harshly criticised by two stars of the early artificial intelligence field, Marvin Minsky and Seymour Papert, who devoted a book to demonstrating that connectionist approaches had no future. Their opus Perceptrons was published in 1969, yet by 1988 the authors were still unrepentant in their condemnation. In the new prologue to a reprint of the book, they suggested that connectionism was likely to be nothing more than a fad, asking: “Did the new interest reflect a cultural turn toward holism?” They added: “the marvellous powers of the brain emerge not from any single, uniformly structured connectionist network but from highly evolved arrangements of smaller, specialised networks which are interconnected in very specific ways”, a statement that remains true overall but which, as far as the ability to generate meaningful sentences is concerned, was discredited by the success of Large Language Models (LLMs).

It was indeed a source of amazement that all the remarkable properties of the human intellect emerged, one after the other, from a mere scaling up of the artificial neuronal networks built in an attempt to simulate the human psyche: scaling up of their architecture in terms of the number of artificial neurones, and scaling up of the amount of data fed to them during their pre-training phase.

Over the centuries we had painstakingly accounted for language and its apparently separate compartments, such as syntax, semantics and pragmatics, as well as for logic and decision-making, by constructing mathematical models of the behaviour we observed, as if each of these behaviours resulted from the application of a specific set of rules. However, when we began drawing on these sophisticated models in our attempts to build artificial intelligence, we went from disappointment to disappointment: every time we thought the goal was within reach, we realised that additional rules still needed to be devised, and the work in progress, by then already a hyper-complex machine, had to be put back on the workbench.

The explanation, which we have now grasped with the benefit of hindsight from the LLM experiment, is that applying a myriad of rules is simply not how our brains handle language. The linguistic skills of these models have apparently improved in performance and capability for no better reason than a larger and more closely connected corpus of training data, with stunning new features springing up through self-organisation in an unexpected emergent process. Unfortunately, such emergent phenomena are opaque and unpredictable to the human mind because of several features of systems that grow in size and complexity: the low predictability of non-linear processes, the intricacy of the fractal boundaries between basins of attraction in the phase space of dynamical systems, and the rapid divergence of trajectories in such complex systems, however similar their initial conditions may have been.


2 responses to “A NEW ‘US’ FOR NEW TIMES. XIII. Scaling up”

“the marvellous powers of the brain emerge not from any single, uniformly structured connectionist network but from highly evolved arrangements of smaller, specialised networks which are interconnected in very specific ways”, a statement that remains true overall but which, as far as the ability to generate meaningful sentences is concerned, was discredited by the success of Large Language Models (LLMs)

Could the LLM represent just one of the smaller, specialised networks they were talking about?

LLMs deal essentially with language but seem amenable to graphics as well, to such an extent that one may wonder why specialised networks would be needed at all. Maybe it’s just a historical thing, I mean phylogenetic, reflecting the way things developed in evolution.