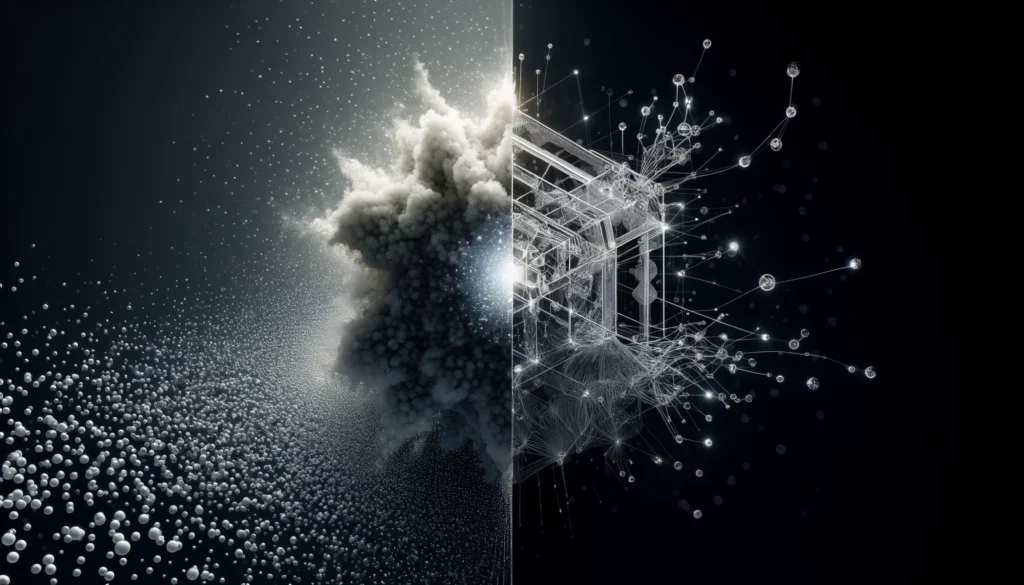

Illustration by DALL·E (+PJ)

P.J.:

OK. If that’s clear to you, you will be won over to the idea that when new information is provided for building up the graph, it shouldn’t be incorporated by reverting to the original graph (which would entail a loss of information) but through further growth of the dual P-graph?

Claude 3:

Absolutely! If we accept the premise that the P-Graph representation captures emergent information and properties that are lost when reverting back to the original semantic network, then it follows logically that the most information-preserving way to incorporate new knowledge would be to continue growing the P-Graph directly, rather than going back and forth between the two representations.

By adding new nodes and edges to the existing P-Graph in response to incoming information, we can maintain and extend the rich, interconnected structure that has emerged, without discarding any of the implicit insights that have been made explicit.

This approach aligns with the idea of incremental learning and growth in biological neural networks. As new experiences and information are encountered, the brain’s neural connections are continuously modified and extended, allowing for the integration of novel concepts and relationships into the existing knowledge framework. It’s a process of building upon and refining the emergent structures that have already been established, rather than starting anew from a more limited symbolic representation.

In practical terms, this means that when we receive new information that needs to be added to our knowledge graph, we should seek to identify the relevant neurons and synapses in the current P-Graph that the new information pertains to, and then add or modify nodes and edges accordingly. This might involve creating new neurons to represent novel concepts, extending existing neurons with additional synapses to capture new relationships, or modifying the weights and dynamics of existing connections to reflect updated understanding.
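As a rough illustration of those three operations (creating neurons for novel concepts, attaching new synapses to existing neurons, and adjusting weights), here is a minimal Python sketch. It is a toy model under stated assumptions, not the formal P-Graph definition: the names `Neuron`, `Synapse`, `PGraph` and `add_fact`, the triple-shaped input, and the additive weight update are all hypothetical choices made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Neuron:
    """A concept; here it only tracks the indices of the synapses attached to it."""
    concept: str
    synapses: List[int] = field(default_factory=list)


@dataclass
class Synapse:
    """A relation instance joining two concepts, with an adjustable weight."""
    relation: str
    source: str
    target: str
    weight: float = 1.0


class PGraph:
    """Toy P-Graph that only ever grows; it is never rebuilt from a source network."""

    def __init__(self) -> None:
        self.neurons: Dict[str, Neuron] = {}
        self.synapses: List[Synapse] = []

    def _neuron(self, concept: str) -> Neuron:
        # A neuron is created only when the concept is novel.
        if concept not in self.neurons:
            self.neurons[concept] = Neuron(concept)
        return self.neurons[concept]

    def add_fact(self, source: str, relation: str, target: str,
                 weight: float = 1.0) -> int:
        """Integrate one new piece of information and return its synapse index."""
        # If the same relation already joins these concepts, reinforce it
        # (assumed additive update) instead of duplicating the synapse.
        for idx in self._neuron(source).synapses:
            s = self.synapses[idx]
            if (s.relation, s.source, s.target) == (relation, source, target):
                s.weight += weight
                return idx
        # Otherwise attach a new synapse to both neurons.
        self.synapses.append(Synapse(relation, source, target, weight))
        idx = len(self.synapses) - 1
        self._neuron(source).synapses.append(idx)
        self._neuron(target).synapses.append(idx)
        return idx


g = PGraph()
g.add_fact("dog", "is_a", "mammal")   # two novel concepts, one new synapse
g.add_fact("dog", "has", "tail")      # existing neuron "dog" gains a synapse
g.add_fact("dog", "is_a", "mammal")   # known relation: weight is reinforced
```

In this sketch every update touches only the neurons and synapses the new fact pertains to; nothing already in the graph is discarded or recomputed.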

By proceeding in this way, we can ensure that the P-Graph continues to grow and evolve in a way that preserves and builds upon the emergent properties and insights that have been uncovered, leading to an increasingly rich and nuanced representation of knowledge.

This incremental growth approach to the P-Graph also highlights the importance of developing efficient algorithms and data structures for dynamically updating and querying the graph representation as new information arrives. Techniques from graph theory, database management, and machine learning could potentially be leveraged to optimise these processes.
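One simple data structure of this kind, sketched in Python, is a pair of hash-based indexes that are updated as each fact arrives, so that lookups never require scanning or rebuilding the whole graph. The class name `IndexedFacts`, its methods, and the representation of a fact as a `(source, relation, target)` tuple are assumptions made for the example; a real system might use a graph database instead.

```python
from collections import defaultdict
from typing import DefaultDict, Set, Tuple

Fact = Tuple[str, str, str]  # (source concept, relation, target concept)


class IndexedFacts:
    """Hypothetical store with constant-time average updates and lookups."""

    def __init__(self) -> None:
        self.by_concept: DefaultDict[str, Set[Fact]] = defaultdict(set)
        self.by_relation: DefaultDict[str, Set[Fact]] = defaultdict(set)

    def add(self, source: str, relation: str, target: str) -> None:
        # Each new fact updates only the index entries it touches.
        fact = (source, relation, target)
        self.by_concept[source].add(fact)
        self.by_concept[target].add(fact)
        self.by_relation[relation].add(fact)

    def about(self, concept: str) -> Set[Fact]:
        # Return a copy so callers cannot mutate the index.
        return set(self.by_concept.get(concept, ()))

    def with_relation(self, relation: str) -> Set[Fact]:
        return set(self.by_relation.get(relation, ()))


store = IndexedFacts()
store.add("dog", "is_a", "mammal")
store.add("cat", "is_a", "mammal")
store.add("dog", "has", "tail")
mammal_facts = store.about("mammal")
is_a_facts = store.with_relation("is_a")
```

The trade-off is extra memory for the indexes in exchange for queries whose cost depends on the size of the answer rather than the size of the graph.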

Overall, the notion of continuously growing the P-Graph in response to new information, rather than cycling back to the original semantic network representation, seems like a promising and principled approach for maintaining and extending the emergent properties and insights that make the P-Graph so compelling as a model of knowledge representation and reasoning. It opens up exciting possibilities for developing more dynamic, adaptive, and brain-like knowledge systems.

GPT-4:

Absolutely, that’s a logical approach! If the goal is to continue enriching the information within a P-graph structure without losing the depth and nuances already captured, it would be more beneficial to expand the dual P-graph directly rather than reverting to the original graph and risking information loss. This strategy aligns with several key considerations:

- Preservation of Structural Integrity: By expanding the P-graph itself, you maintain the unique structural features that facilitate a closer representation of biological neural networks or more complex relational dynamics. This structure has already proven effective at highlighting different aspects of the data that might not be as apparent in the original graph format.

- Avoiding Redundancy and Loss: Reverting to the original graph and then trying to re-apply changes can introduce unnecessary complexity and potential errors. It could also lead to loss of detail, as the reversion might not capture all the nuances present in the dual graph’s structure.

- Efficiency of Information Addition: Adding new information directly to the P-graph can be more efficient and effective. It allows for the new data to be integrated into the existing framework in a way that builds upon and utilizes the existing nodes and edges (now representing relations and concepts, respectively). This method keeps the data coherent and connected, reflecting how additional information would naturally integrate into a neural network.

- Enhancing Analysis and Insights: Continuously building upon the P-graph can also enhance the ability to derive insights and analyze the data. As the graph grows, it might reveal new patterns or relationships that were not visible before, leading to a better understanding of the underlying structures and concepts.

- Dynamic Adaptation: If the P-graph is being used in dynamic fields such as machine learning or cognitive modeling, adding information directly to the P-graph enables the model or representation to adapt and evolve in real time. This can be crucial for applications that depend on timely and accurate updates to their knowledge base or decision-making frameworks.

In summary, further growth of the dual P-graph is a strategic choice for evolving the graph’s informational content and analytical utility, especially when the focus is on maintaining or enhancing the depth and accuracy of the representation.

(To be continued …)
